Tianlu Wang

I am a research scientist on the FAIR team at Meta AI, working on post-training for large language models. I received my Ph.D. in Computer Science from the University of Virginia, where I was advised by Prof. Vicente Ordóñez Román. Before that, I earned my bachelor's degree in Computer Science from Zhejiang University, China.

Recent Selected Publications

Jointly Reinforcing Diversity and Quality in Language Model Generations

Tianjian Li, Yiming Zhang, Ping Yu, Swarnadeep Saha, Daniel Khashabi, Jason Weston, Jack Lanchantin, Tianlu Wang.

ASTRO: Teaching Language Models to Reason by Reflecting and Backtracking In-Context

Joongwon Kim, Anirudh Goyal, Liang Tan, Hannaneh Hajishirzi, Srinivasan Iyer, Tianlu Wang.

J1: Incentivizing Thinking in LLM-as-a-Judge via Reinforcement Learning

Chenxi Whitehouse, Tianlu Wang, Ping Yu, Xian Li, Jason Weston, Ilia Kulikov, Swarnadeep Saha.

Multi-Token Attention

Olga Golovneva, Tianlu Wang, Jason Weston, Sainbayar Sukhbaatar. COLM 2025

Learning to Plan & Reason for Evaluation with Thinking-LLM-as-a-Judge

Swarnadeep Saha, Xian Li, Marjan Ghazvininejad, Jason Weston, Tianlu Wang. ICML 2025

Self-Taught Evaluators

Tianlu Wang, Ilia Kulikov, Olga Golovneva, Ping Yu, Weizhe Yuan, Jane Dwivedi-Yu, Richard Yuanzhe Pang, Maryam Fazel-Zarandi, Jason Weston, Xian Li.

Contextual Position Encoding: Learning to Count What's Important

Olga Golovneva, Tianlu Wang, Jason Weston, Sainbayar Sukhbaatar.

Chameleon: Mixed-Modal Early-Fusion Foundation Models

Chameleon Team.

Shepherd: A Critic for Language Model Generation

Tianlu Wang, Ping Yu, Xiaoqing Ellen Tan, Sean O'Brien, Ramakanth Pasunuru, Jane Dwivedi-Yu, Olga Golovneva, Luke Zettlemoyer, Maryam Fazel-Zarandi, Asli Celikyilmaz.

Efficient Tool Use with Chain-of-Abstraction Reasoning

Silin Gao, Jane Dwivedi-Yu, Ping Yu, Xiaoqing Ellen Tan, Ramakanth Pasunuru, Olga Golovneva, Koustuv Sinha, Asli Celikyilmaz, Antoine Bosselut, Tianlu Wang. COLING 2025

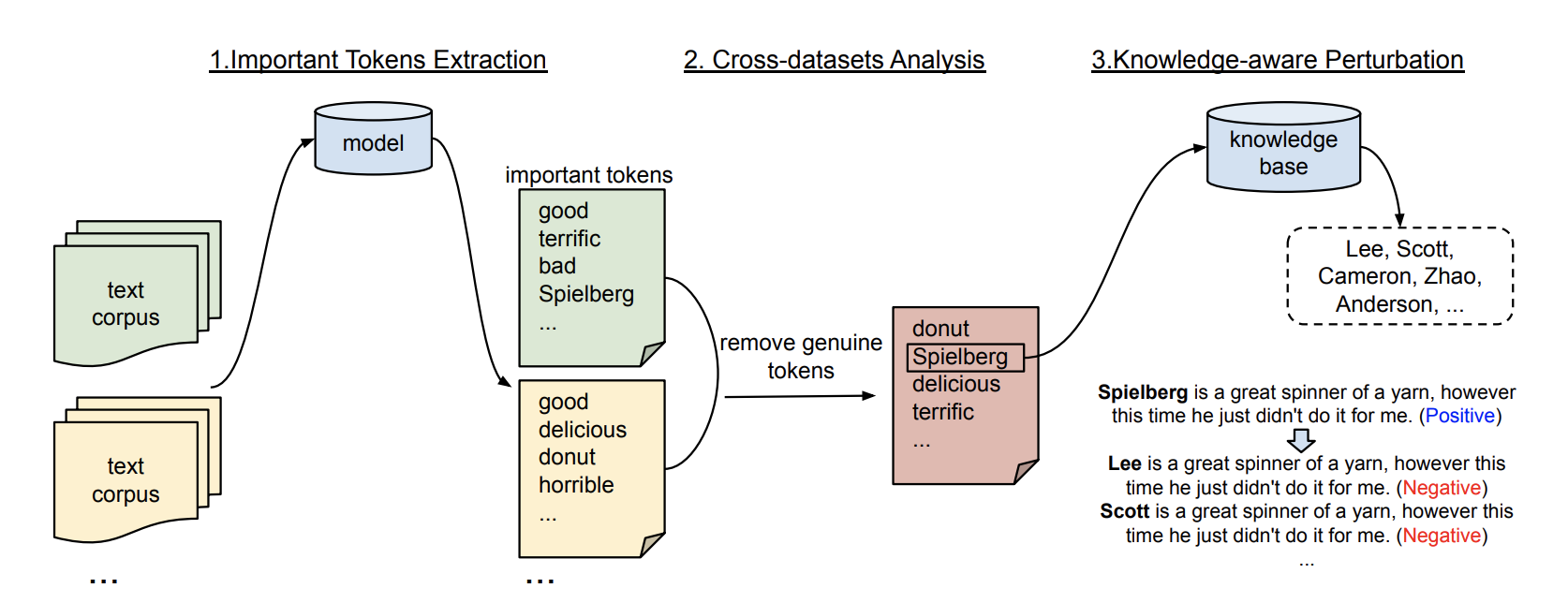

Understanding In-Context Learning via Supportive Pretraining Data

Xiaochuang Han, Daniel Simig, Todor Mihaylov, Yulia Tsvetkov, Asli Celikyilmaz, Tianlu Wang. ACL 2023

OPT: Open Pre-trained Transformer Language Models

Susan Zhang, Stephen Roller, Naman Goyal, Mikel Artetxe, Moya Chen, Shuohui Chen, Christopher Dewan, Mona Diab, Xian Li, Xi Victoria Lin, Todor Mihaylov, Myle Ott, Sam Shleifer, Kurt Shuster, Daniel Simig, Punit Singh Koura, Anjali Sridhar, Tianlu Wang, Luke Zettlemoyer.

Few-shot Learning with Multilingual Language Models

Xi Victoria Lin, Todor Mihaylov, Mikel Artetxe, Tianlu Wang, Shuohui Chen, Daniel Simig, Myle Ott, Naman Goyal, Shruti Bhosale, Jingfei Du, Ramakanth Pasunuru, Sam Shleifer, Punit Singh Koura, Vishrav Chaudhary, Brian O'Horo, Jeff Wang, Luke Zettlemoyer, Zornitsa Kozareva, Mona Diab, Veselin Stoyanov, Xian Li. EMNLP 2022

Old Publications

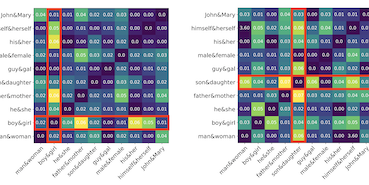

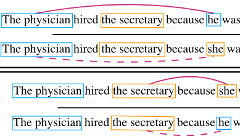

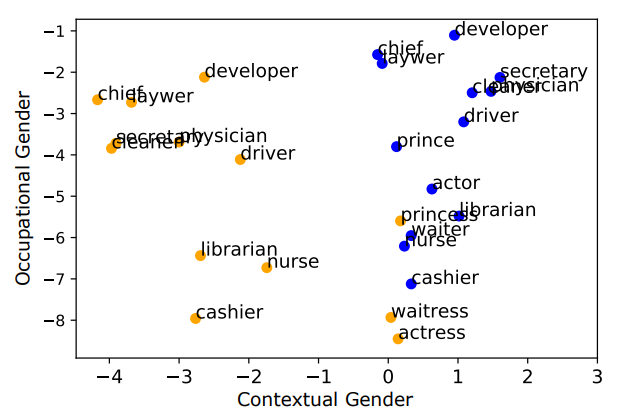

Gender Bias in Contextualized Word Embeddings

North American Chapter of the Association for Computational Linguistics. NAACL 2019 (short paper). Minneapolis, Minnesota. June 2019.

[arXiv] [bibtex]

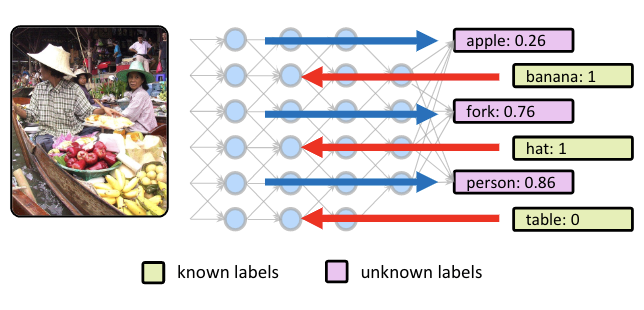

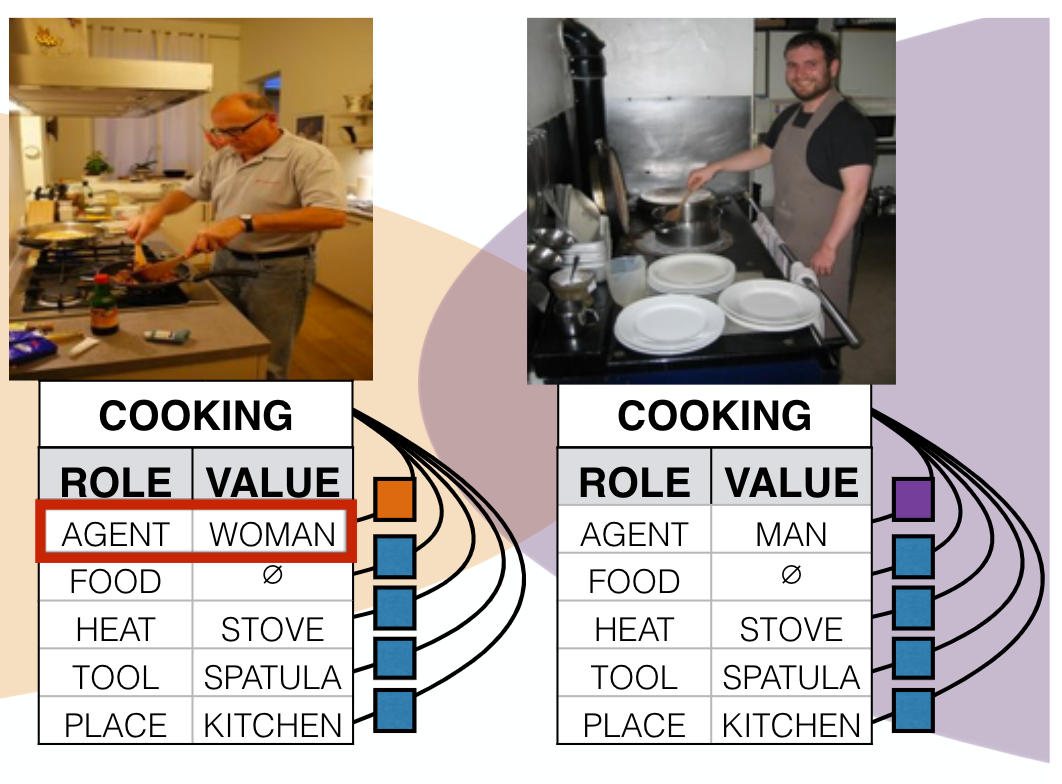

Men Also Like Shopping: Reducing Gender Bias Amplification using Corpus-level Constraints

Jieyu Zhao, Tianlu Wang, Mark Yatskar, Vicente Ordonez, Kai-Wei Chang.

Empirical Methods in Natural Language Processing. EMNLP 2017. Copenhagen, Denmark. September 2017.

[arXiv] [code] [bibtex] (Best Long Paper Award!)

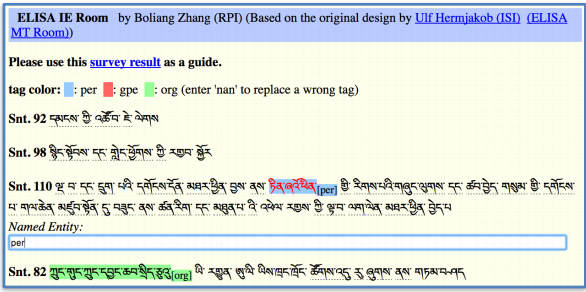

Name Tagging for Low-resource Incident Languages based on Expectation-driven Learning

Boliang Zhang, Xiaoman Pan, Tianlu Wang, Ashish Vaswani, Heng Ji, Kevin Knight, Daniel Marcu.

North American Chapter of the Association for Computational Linguistics. NAACL 2016

[pdf]